Is Streaming’s ‘AI Slop’ Problem Even Worse Than We Thought? Spotify Bolsters ‘AI Protections for Artists,’ Says It’s Booted ‘Over 75 Million Spammy Tracks’ During the Past Year

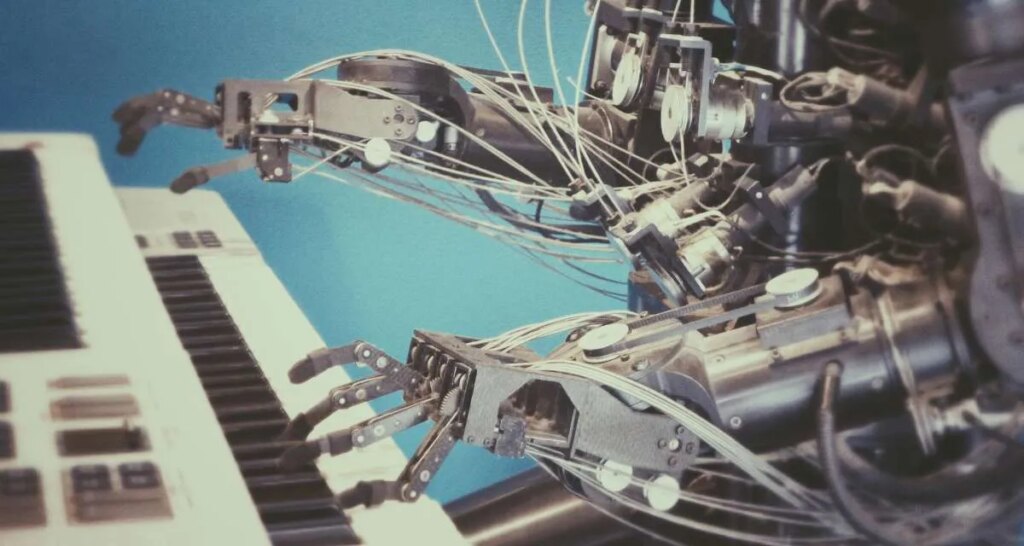

Photo Credit: Possessed Photography

Is streaming’s “AI slop” problem even worse than we thought? It sure seems that way, as Spotify says it’s nuked a staggering “75 million spammy tracks” during the past 12 months.

The DSP disclosed as much today in a wider update about a collection of enhanced “AI protections” for human artists and songwriters. Thanks in large part to recent months’ headlines, pretty much everyone is aware of machine-generated audio’s growing prevalence.

That includes unauthorized soundalike releases, ostensibly non-infringing music attributable to AI “artists,” AI audio (temporarily) hitting legitimate streaming profiles without permission, and more.

But the pernicious issue looks to run deeper than that overview suggests. According to Spotify’s announcement, during “the past 12 months alone, a period marked by the explosion of generative AI tools,” the service has booted “over 75 million spammy tracks.”

While the exact definition of “spammy” is unclear, in this context it presumably refers to the aforementioned unauthorized AI releases and/or machine-generated audio racking up fake streams.

However, the extensive takedowns didn’t decommission The Velvet Sundown, a seemingly non-infringing “act” billed as “a synthetic music project.” Nor did they eliminate associated “bands” like Moonshine Outlaws, which earlier this month dropped an album and a separate single with The Velvet Sundown.

Of course, that’s just the tip of the AI-audio iceberg; one needn’t search far to find other artist pages containing multiple digital images, several albums released on a rapid-fire schedule, and a variety of curious playlist placements.

Also excluded from the spammy-release purge: A number of identical instrumental “songs” attached to various profiles. Nearly 30 months after we reported on those brief uploads, a corresponding 49-track playlist – which doesn’t include all the relevant releases – has been hit hard by takedowns but still has 18 live works.

In other words, 75 million removals or not, Spotify certainly isn’t free of AI audio. Enter the AI protections highlighted at the outset, the first being a fresh “impersonation policy” aiming to curb tracks incorporating unapproved soundalike vocals.

As described by Spotify, it’s now fielding related claims through its illegal-content form, with the policy covering any “song that uses a replica of another artist’s voice without that artist’s permission.” Evidently, then, it’ll be on the impacted professionals and their teams to assume identification and reporting duties.

And when it comes to releases hitting actual artists’ pages without approval – to the understandable dismay of their fans – the platform is said to be coordinating with distributors in an effort to halt the problem.

“We’re testing new prevention tactics with leading artist distributors to equip them to better stop these attacks at the source,” Spotify noted. “On our end, we’ll also be investing more resources into our content mismatch process, reducing the wait time for review, and enabling artists to report ‘mismatch’ even in the pre-release state.”

Next, Spotify intends to roll out “a new music spam filter” later this fall.

In a nutshell, the long-overdue system “will identify uploaders and tracks” employing “spam tactics” such as “mass uploads, duplicates, SEO hacks, artificially short track abuse, and other forms of slop,” per Spotify.

Also on the horizon is a “new industry standard for AI disclosures in music credits, developed through DDEX.” Though a good start, it doesn’t look like this so-called industry standard will go far enough (or, at a minimum, be properly leveraged) out of the gate. Rather than tagging AI-generated tracks outright, the step will revolve around collecting creative-process particulars at the distributor and label level.

“The industry needs a nuanced approach to AI transparency, not to be forced to classify every song as either ‘is AI’ or ‘not AI,’” Spotify wrote.

At least in theory, tracks with AI vocals will eventually be identified as such on Spotify, with an appropriate tag for AI-generated instrumentals and so on. But the fundamental problem remains that machine-made audio can be pumped out in no time, is pouring onto DSPs in droves, and is stealing listeners as well as royalties from human talent.

Link to the source article – https://www.digitalmusicnews.com/2025/09/25/spotify-ai-protections-announcement/
