Cornell Researchers Develop Light-Based Watermark to Detect Deepfakes

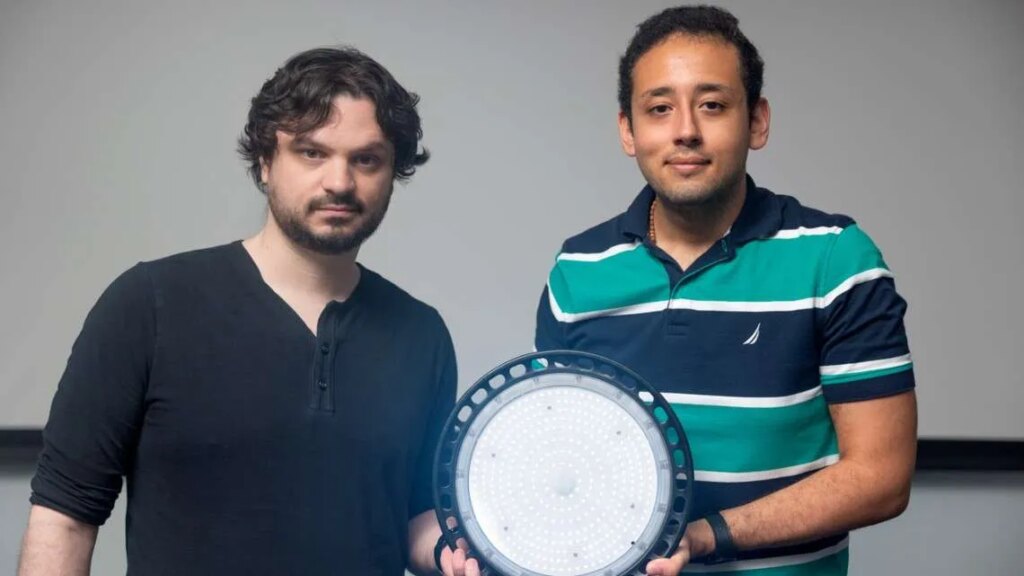

Photo Credit: Sreang Hok / Cornell University (Asst. Professor Abe Davis, Peter Michael)

New research out of Cornell University is making headlines in the fight against deepfakes. As synthetic media becomes more sophisticated and harder to distinguish from authentic content, the stakes for industries like music and film have never been higher.

Cornell’s latest work introduces a way to watermark video by hiding imperceptible codes in the light sources used during recording. Developed by graduate student Peter Michael and conceived by assistant professor Abe Davis, the technique embeds unique, subtle fluctuations in the lighting on set. These variations are too small for the human eye to notice, but forensic analysis tools can detect them in the recorded footage.

The lighting is programmed to fluctuate in a pattern that encodes a secret signature into the video. When forensic analysts need to verify a video’s authenticity, they search for this embedded signature. If the content has been altered, inconsistencies in the watermark pattern reveal the tampering.
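To make the verification step concrete, here is a minimal Python sketch built on simplifying assumptions that are ours, not the Cornell team’s: the coded light nudges each frame’s average brightness up or down according to a secret pseudo-random ±1 sequence, and a verifier who knows the seed checks, window by window, whether the brightness still follows that sequence. The names `make_code`, `record`, `code_strength`, and `verify`, as well as the amplitude, noise, and window values, are hypothetical. The toy model ignores what makes the real problem hard, such as moving subjects and changing scene brightness.

```python
import random

AMPLITUDE = 0.01  # hypothetical modulation depth, assumed small enough to go unnoticed


def make_code(seed: int, n_frames: int) -> list[int]:
    """Hypothetical secret code: a pseudo-random +1/-1 sequence derived from a private seed."""
    rng = random.Random(seed)
    return [rng.choice((-1, 1)) for _ in range(n_frames)]


def record(code: list[int], noise: float = 0.005, seed: int = 0) -> list[float]:
    """Simulate each frame's mean brightness (constant scene at 0.5) under a light following `code`."""
    rng = random.Random(seed)
    return [0.5 + AMPLITUDE * c + rng.gauss(0.0, noise) for c in code]


def code_strength(brightness: list[float], code: list[int]) -> float:
    """Least-squares slope of brightness on the code: about AMPLITUDE if present, about 0 if absent."""
    n = len(code)
    mean_b = sum(brightness) / n
    mean_c = sum(code) / n
    num = sum((b - mean_b) * (c - mean_c) for b, c in zip(brightness, code))
    den = sum((c - mean_c) ** 2 for c in code)
    return num / den


def verify(brightness: list[float], code: list[int], window: int = 100) -> list[int]:
    """Return the indices of fixed-size windows in which the expected code is missing."""
    flagged = []
    for start in range(0, len(code) - window + 1, window):
        strength = code_strength(brightness[start:start + window], code[start:start + window])
        if strength < AMPLITUDE / 2:
            flagged.append(start // window)
    return flagged


code = make_code(seed=42, n_frames=400)
footage = record(code)

# Simulated edit: frames 100-199 are replaced with footage shot without the coded light.
edit_rng = random.Random(7)
tampered = footage[:100] + [0.5 + edit_rng.gauss(0.0, 0.005) for _ in range(100)] + footage[200:]

print(verify(footage, code))   # → []
print(verify(tampered, code))  # → [1]
```

The least-squares slope is used instead of a raw correlation so the check reads directly as “how much of the expected code is in this window”, with the pass threshold set at half the assumed modulation depth.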

Critically, Cornell’s system allows several layers of codes to be embedded simultaneously. Even if a bad actor learns how the system works, they would have to replicate every code flawlessly across every frame of the video, a task that grows exponentially harder with each additional light-based watermark layer. The technique has been tested successfully in both indoor and outdoor settings and has worked consistently across a diverse range of subjects and skin tones, which is vital if it is to be broadly adopted in the creative industries.
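The multi-layer idea can be sketched under the same toy model. In this hypothetical variant (again our simplification, not the published method), two lights are each driven by their own secret ±1 sequence at the same time, and the verifier requires every code to be present in every window. A forger who has reproduced only one of the codes passes the check for that code but fails on the other. All names and parameter values are illustrative assumptions.

```python
import random

AMPLITUDE = 0.01  # hypothetical modulation depth contributed by each coded light


def make_code(seed: int, n_frames: int) -> list[int]:
    """Hypothetical secret code: a pseudo-random +1/-1 sequence derived from a private seed."""
    rng = random.Random(seed)
    return [rng.choice((-1, 1)) for _ in range(n_frames)]


def code_strength(brightness: list[float], code: list[int]) -> float:
    """Least-squares slope of brightness on the code: about AMPLITUDE if present, about 0 if absent."""
    n = len(code)
    mean_b = sum(brightness) / n
    mean_c = sum(code) / n
    num = sum((b - mean_b) * (c - mean_c) for b, c in zip(brightness, code))
    den = sum((c - mean_c) ** 2 for c in code)
    return num / den


def record(codes: list[list[int]], n_frames: int, noise: float = 0.005, seed: int = 0) -> list[float]:
    """Simulate per-frame mean brightness while every code in `codes` drives its own light."""
    rng = random.Random(seed)
    return [0.5 + sum(AMPLITUDE * code[t] for code in codes) + rng.gauss(0.0, noise)
            for t in range(n_frames)]


def verify_all(brightness: list[float], codes: list[list[int]], window: int = 200) -> dict[int, list[int]]:
    """Map each failing window index to the indices of the codes missing from it."""
    report = {}
    for start in range(0, len(brightness) - window + 1, window):
        segment = brightness[start:start + window]
        missing = [k for k, code in enumerate(codes)
                   if code_strength(segment, code[start:start + window]) < AMPLITUDE / 2]
        if missing:
            report[start // window] = missing
    return report


# Two lights, each with its own secret code, recorded together for 600 frames.
codes = [make_code(seed, 600) for seed in (11, 22)]
footage = record(codes, 600)

# A forger who has learned only code 0 re-synthesizes frames 200-399 with that code alone.
forged = record([codes[0]], 600, seed=5)
tampered = footage[:200] + forged[200:400] + footage[400:]

print(verify_all(footage, codes))   # → {}
print(verify_all(tampered, codes))  # → {1: [1]}
```

In this toy model, if each code were an independent n-frame ±1 sequence, an exact blind guess of one code would succeed with probability 2^-n and of all k codes with probability 2^-kn, which is one way to read the claim that difficulty compounds with every added layer.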

The implications for the music business, and for Hollywood, are profound. Uncontrolled synthetic content can damage an artist’s reputation, erode brand value, and undermine the integrity of creative works. Deepfakes also raise urgent questions about Name, Image, and Likeness (NIL) rights, which have quickly become a cornerstone of artist contracts. NIL covers uses of an artist’s face, likeness, or digital persona beyond recordings and publishing, and it carries substantial commercial value, especially for superstar acts.

Hollywood, too, faces real-world dilemmas. Disney reportedly shelved plans to use AI-generated deepfakes of Dwayne “The Rock” Johnson in a film project, even though the actor had consented. Studio lawyers recognized a legal and ethical quagmire: current U.S. copyright law does not protect AI-generated variations, and using them could expose creators to lawsuits or reputational damage.

Cornell’s light-based watermark system offers a potential way for studios, labels, and event organizers to verify footage authenticity before release. This could help prevent unauthorized NIL usage and shield artists, brands, and intellectual property from increasingly convincing forgeries.

Link to the source article – https://www.digitalmusicnews.com/2025/08/12/cornell-researchers-light-based-watermark/
