Spotify’s Latest AI Mess Exposes the Platform’s Lax Controls — With Deceased Artists and Estates the Latest Targets

Photo Credit: Spotify

Yesterday, Digital Music News reported on AI-generated tracks appearing on a long-deceased singer-songwriter’s official Artist Page. A Spotify spokesperson reached out to state that the song has been removed, but the statement places the blame on the distributor, SoundOn. Doesn’t Spotify bear some responsibility to artists and listeners to ensure this does not happen?

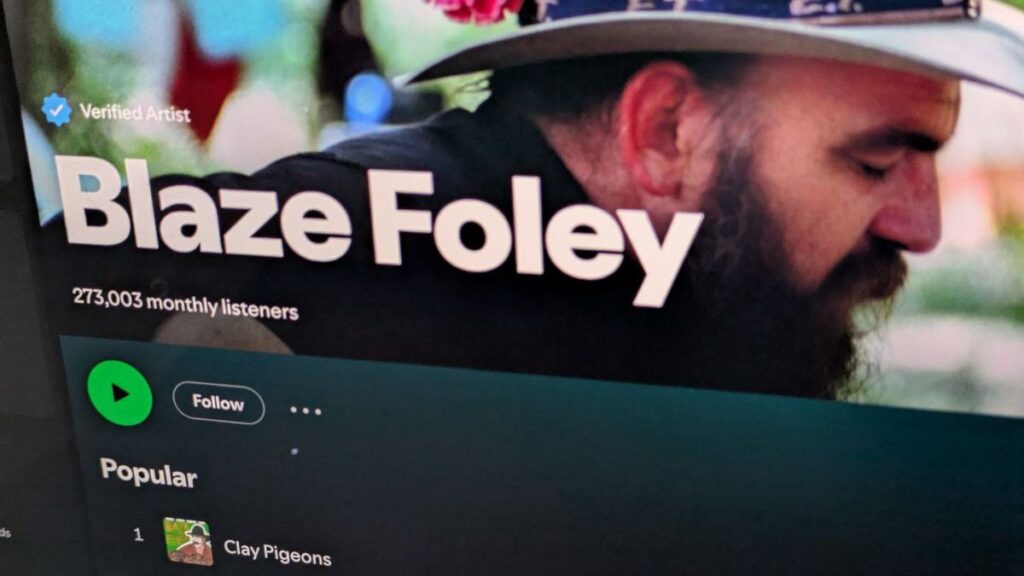

Music streaming, for all its accessibility, faces new challenges in a world powered by AI. The appearance of an AI-generated song titled “Together” on the official Spotify page of the late country icon Blaze Foley is a glaring example of how Spotify has failed to adequately protect both artists’ legacies and listener trust.

Spotify’s removal of the song after public outcry and reporting does not erase the incident. It raises questions about what Spotify can do to prevent this from happening in the future. Spotify’s statement to Digital Music News cites the ‘deceptive content policy’ that led to the takedown of this AI-generated track. Here is the statement:

“We’ve flagged the issue to SoundOn, the distributor of the content in question, and it has been removed. This violates Spotify’s deceptive content policies, which prohibit impersonation intended to mislead, such as replicating another creator’s name, image, or description, or posing as a person, brand, or organization in a deceptive manner. This is not allowed. We take action against licensors and distributors who fail to police for this kind of fraud and those who commit repeated or egregious violations can and have been permanently removed from Spotify.”

Yet this reactive approach is cold comfort to rights holders and estate managers, many of whom may not monitor official Artist Pages on a daily basis. (Did you know Deezer estimates 18% of tracks delivered to that DSP are AI-generated now?) The fact remains: the song appeared on Foley’s official page without any input or approval from those who manage his legacy. The track even featured AI-generated artwork bearing no resemblance to the late artist.

Why Spotify’s Passive Policing Fails Artists & Listeners

The current system Spotify employs shifts the burden onto those least equipped to respond quickly. Family members, estate managers, and independent rights holders are required to actively patrol their deceased artists’ digital storefronts. This model is fundamentally flawed and leads to a worse experience for fans of the artist.

The impersonation occurs before any intervention, leaving fans confused about a potential new release. Blaze Foley died in 1989 and has released no new music since, yet his 273,000 monthly listeners on Spotify were alerted that new music was available.

Distributors, seemingly with little oversight, can push music to Artist Pages, bypassing necessary human verification, especially for legacy or deceased Artist Page accounts. Why should this type of AI fraud be possible to perpetrate at all? Shouldn’t there be some mechanism to prevent AI content from appearing on legacy artists’ pages? Even a notification from Spotify that new content is appearing on the page would go further than what Blaze Foley’s estate manager says he received.

Expecting managers and rights holders to catch every violation is unrealistic and unfair, particularly for deceased artists with limited active oversight. Big Tech has already solved this problem for ordinary users who die: Facebook offers a way for relatives and estate managers to memorialize a deceased person’s page (or permanently delete it). Spotify could do the same for deceased artists, requiring explicit permission from the estate or rights holders before new content could be added to the Artist Page.

Spotify says it is genuinely committed to protecting artistic legacies and preserving listener trust, but its reactive response does not indicate that. Stronger pre-release verification is essential in the Intelligence Age. An upload from an arbitrary distributor should not appear on an official Artist Page on Spotify without that verification.

Any new work attributed to deceased or inactive artists should be subject to manual approval by an authorized estate representative before public release. Spotify should also label AI-generated content more clearly, introducing a visible designation and user-facing filters for AI-originated tracks to prevent deception.

For every fraudulent upload that attracts public attention, countless others may slip through, eroding the credibility and value of Spotify’s role as a digital streaming platform. The Blaze Foley incident, alongside similar cases involving other classic artists, exposes how easy it is for bad actors—or careless distributors—to disrupt digital legacies.

Spotify’s business depends on the trust of listeners and goodwill of artists—alive or departed. Absent assertive reforms, the platform risks alienating estates, damaging revered catalogs, and undermining music’s cultural memory. It’s no longer enough for Spotify to clean up messes only after they’ve drawn outrage. The streaming giant must put serious gatekeeping where it belongs: before anything reaches an artist’s official page.

Link to the source article – https://www.digitalmusicnews.com/2025/07/22/spotifys-lax-controls-exposed-the-blaze-foley-ai-song-debacle/
